Rubin Observatory

Vera C. Rubin Observatory will produce an unprecedented astronomical data set for studies of the deep and dynamic universe, make the data widely accessible to a diverse community of scientists, and engage the public to explore the Universe with us.

The goal of the Vera C. Rubin Observatory project is to conduct the 10-year Legacy Survey of Space and Time (LSST).

Partners and sponsors

- This project developed in the nursery of the Transient and Variable Stars Science Collaboration

- The musicians on this project are faculty and students at Lincoln University, the nation's first degree-granting Historically Black College and University

- The astrophysicists and data scientists on this team are faculty and students at the University of Delaware

- The Heising-Simons Foundation has generously supported this work through the “Leveling the playing field” 2021-2022 grant to the Science Collaborations.

- The “Leveling the playing field” 2021-2022 grant to the Science Collaborations is administered by the Las Cumbres Observatory (LCO)

- Rubin Observatory and the LSST Corporation have generously supported the participation of Ms. Wells and Dr. Limb in the Rubin Project Community Workshop 2022, where they presented their work on Rubin Rhapsodies

Rubin Rhapsodies: a project to offer access to the Legacy Survey of Space and Time data through sound

Sonification is the practice of giving an audible representation of information and processes. While visualizations are the traditional means of making data accessible to scientists as well as to the public, sonifications are a less common but powerful alternative. In scientific visualizations, specific data properties are mapped to visual elements such as color, shape, or position in a plot. Similarly, data properties can be mapped to sound properties such as pitch, volume, or timbre. To convey information successfully, both visualizations and sonifications need to be systematic and reproducible, and must not distort the data.
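To make the idea of parameter mapping concrete, here is a minimal Python sketch, using only the standard library, that maps one data property (brightness) to one sound property (pitch). It is an illustration under our own assumptions, not the Rubin Rhapsodies design: the function names, the 220 to 880 Hz pitch range, the exponential flux-to-frequency mapping, the one-tone-per-observation timing, the 44.1 kHz sample rate, and the toy light curve are all hypothetical choices made for this example.

```python
import math
import os
import struct
import tempfile
import wave

SAMPLE_RATE = 44100  # assumed sample rate in samples per second (CD quality)


def flux_to_frequency(fluxes, f_min=220.0, f_max=880.0):
    """Hypothetical mapping: each flux value -> a pitch between f_min and f_max (Hz).

    Flux is normalized to [0, 1] and mapped exponentially in frequency,
    so equal steps in flux give equal steps in perceived (musical) pitch.
    """
    lo, hi = min(fluxes), max(fluxes)
    span = hi - lo or 1.0  # avoid division by zero for a flat light curve
    return [f_min * (f_max / f_min) ** ((f - lo) / span) for f in fluxes]


def sonify(fluxes, note_seconds=0.2, amplitude=0.5, rate=SAMPLE_RATE):
    """Render one sine-wave tone per observation, in the order given.

    Returns a list of float samples in [-amplitude, amplitude].
    Note boundaries are abrupt (no envelope), so they may click audibly.
    """
    samples = []
    n = round(note_seconds * rate)  # samples per note
    for freq in flux_to_frequency(fluxes):
        for i in range(n):
            samples.append(amplitude * math.sin(2 * math.pi * freq * i / rate))
    return samples


def write_wav(path, samples, rate=SAMPLE_RATE):
    """Write mono 16-bit PCM audio using the standard-library wave module."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wav.setframerate(rate)
        frames = b"".join(struct.pack("<h", int(s * 32767)) for s in samples)
        wav.writeframes(frames)


if __name__ == "__main__":
    # Made-up toy light curve (not LSST or PLAsTiCC data):
    # a transient that brightens, peaks, then fades.
    toy_fluxes = [1.0, 1.2, 2.5, 4.0, 3.1, 2.2, 1.6, 1.3, 1.1, 1.0]
    out_path = os.path.join(tempfile.gettempdir(), "toy_light_curve.wav")
    write_wav(out_path, sonify(toy_fluxes))
    print("wrote", out_path)
```

The exponential flux-to-frequency mapping keeps the scheme systematic and monotonic: each equal step in normalized flux moves the pitch by the same musical interval, which matches how listeners perceive pitch better than a linear frequency scale would. Observation time is used here only to order the notes; a fuller scheme could also map time to note onset, or map other time-domain features to volume or timbre, along the lines of the mappings in Figure 1.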

The goal of our collaboration between scientists and musicians is to establish a standard for the sonification of the complex Rubin data and to advocate for the implementation of Rubin sonifications to expand access to the LSST data.

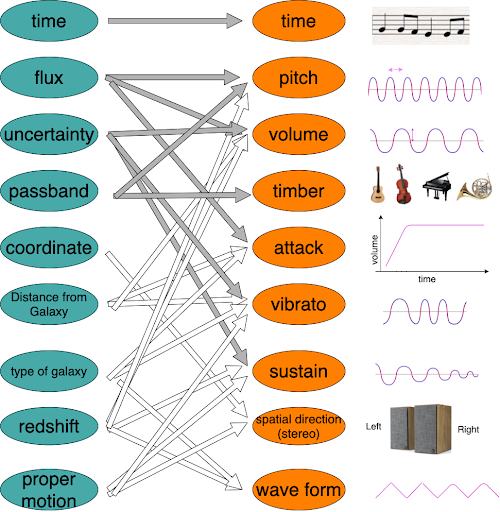

Figure 1: Parameter mapping scheme. On the left are LSST time-domain data features; on the right are sound parameters (with a graphical representation of their meaning). Arrows represent potential mappings; mappings tested by our group using the PLAsTiCC dataset are shown in gray.

Figure 1: Parameter mapping scheme: on the left are LSST time-domain data features,on the right sound parameters (and a graphical representation of their meaning). Arrows represent potential mapping, in gray are mappings tested by our group unsing the PLAsTiCC dataset.

Examples of discovery through sound are emerging across disciplines (e.g., Tillman, N. T. 2019, and this incredible TED talk by Wanda Diaz Merced). While sonification is generally considered a potentially useful alternative and complement to visual approaches, [it] has not reached the same level of acceptance (de Campo 2009). Sonifications of scientific data are scarce, even though the differences in how we process visual and auditory stimuli imply that sonification, by emphasizing complementary relationships in the data, can reveal properties that might be missed in visualizations. For example, compared to the human eye, the ear is orders of magnitude more sensitive to changes in signal intensity (Treasure 2011).

Integrating sonification into the LSST data analysis workflow serves two equally important purposes. First, it is an issue of equity and research inclusion. Rubin's commitment to equity would not be complete if it did not enable Blind and Visually Impaired (BVI) users to explore its rich scientific data. Because the Rubin data will be accessed through the Rubin Science Platform (RSP), BVI users will not be able to analyze the data with tools on their own devices, so sonification has to be integrated at the RSP level. Without such efforts, Rubin runs the risk of inadvertently locking BVI users out of the process of scientific discovery. Second, we argue that sonification will enable alternative and complementary ways to explore Rubin data in all its complexity and high dimensionality, and that the Rubin community will benefit tremendously from extending the representation space beyond visual elements. Just as Rubin will open up the parameter space for astrophysical discovery with its rich dataset, investment in sonification strategies has the potential to expand the representation space, ultimately benefiting the entire scientific community.

But a sound representation of celestial objects will be scientifically effective only if we create a deliberate and clear design scheme for the sonification of LSST data, one that marries the complexity and richness of this dataset with perceptual effectiveness and clarity.

Our team

Collaborators

A number of people have been involved in ideating, conceptualizing, and securing funds for this project including:

- Siegfried Eggl (UIUC, Solar System SC)

- Scott Fleming (STScI)

- Sara Bonito (INAF, TVS SC)